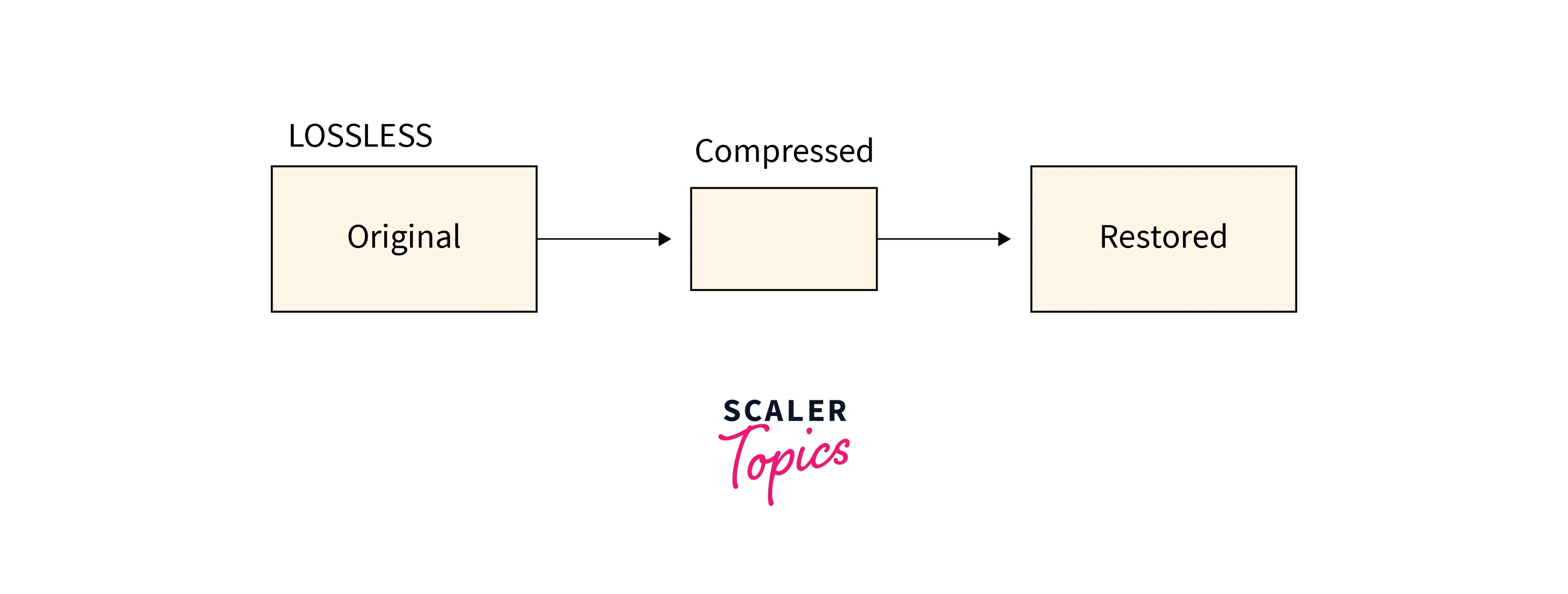

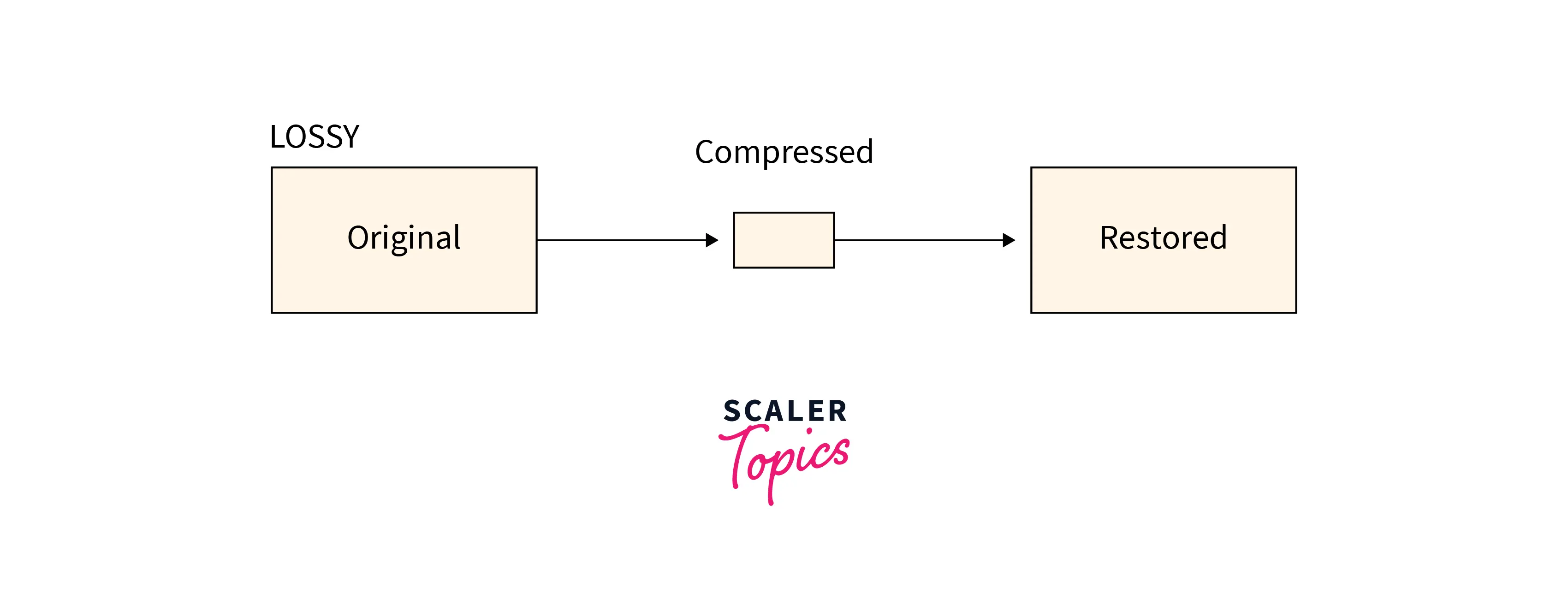

Lossless compression and lossy compression are two methods that can be used. In contrast to lossless compression, which preserves all data, lossy compression shrinks the size of the data by deleting extraneous information.

For lossless data compression of multimedia, the Shannon-Fano Algorithm is an entropy encoding method. It gives each symbol a code depending on how often it is to occur, and it bears the names of Claude Shannon and Robert Fano. The codes that are issued to the symbols will be of varying lengths because it is a variable-length encoding method.

Introduction

The act of encoding or transforming data such that it uses less memory space is known as data compression, often referred to as source coding. In order to store and transport data, data compression decreases the number of resources needed.

In the realm of data compression, two distinct but connected methods for creating a prefix code based on a collection of symbols and their probabilities are known as Shannon-Fano coding. It is named after Claude Shannon and Robert Fano.

- The binary expansion of the cumulative probability is one method frequently used to select the codewords. In Shannon’s 1948 essay “A Mathematical Theory of Communication,” which established the discipline of information theory, this approach was put out.

- The source symbols are split into two sets—”0″ and “1”—by Fano’s approach, with probability as near as feasible to one-half. Then, each of those sets is split into two, and so on, until there is only one symbol left in each set. The string of “0”s and “1”s that indicates which divides the symbol landed on is the codename for that symbol. This approach was suggested by

Fanoin a subsequent technical report (1949).

Because Huffman coding consistently achieves the shortest predicted codeword length, Shannon-Fano codes are inefficient in this regard. Although the estimated codeword length for Shannon-Fano codes is just one bit short of the ideal. The predicted lengths of the encoding produced by Fano’s approach are often shorter than those of Shannon’s method. Shannon’s approach, nevertheless, is simpler to conceptually analyze.

The ancestor of arithmetic coding, Shannon-Fano-Elias coding (sometimes called Elias coding), should not be confused with Shannon-Fano coding.

Data Compression and its Types

Reducing the size of huge files is done using this method.

Data compression has the benefit of allowing us to transmit data more quickly and with less disc space use.

There are primarily two categories of data compression methods:

- Lossless Data Compression

Without sacrificing the quality or contents of the original file, lossless data compression is utilized to compress the files. Simply said, lossless data compression results in smaller files while maintaining the same level of data quality.

The primary benefit of lossless data compression is that following decompression, we may recover the original data in its original format.

Lossless data compression is primarily employed in the PNG, RAW, GIF, and BMP file formats as well as sensitive papers and secret information.

The following are some of the most crucial Lossless data compression methods: - Run Length Encoding (RLE)

- Lempel Ziv – Welch (LZW)

- Huffman Coding

- Arithmetic Coding

- Lossy Data Compression

Larger files are compressed into smaller files via lossy data compression. This compression method involves the loss (removal) of a particular quantity of data and quality from the original file. Due to the loss of original data and quality, it consumes less memory than the original file. When the quality of the data is not our top priority, this strategy is typically helpful to us.

Several significant Lossy data compression methods include: - Transform coding

- Discrete Cosine Transform (DCT)

- Discrete Wavelet Transform (DWT)

What is Shannon Fano Coding?

In order to create a prefix code, a method known as Shannon-Fano coding, named after Claude Elwood Shannon and Robert Fano, is used. It is based on a set of symbols and the probabilities associated with them. It is suboptimal in that, unlike Huffman coding, it does not attain the shortest predicted codeword length.

The symbols are organized in Shannon-Fano coding from most likely to least likely, and then split into two groups with probabilities that are as near to equality as feasible. The initial digits of each symbol’s code are then allocated, with “0” being given to the first set of symbols and “1” to the second. The same procedure is used for each set that still has more than one member in order to calculate the sets’ subsequent code digits. It goes without saying that when a set gets down to a single symbol, its coding is complete and won’t serve as the prefix for any more symbols.

The method works, and it generates quite effective variable-length encodings; when the two smaller sets generated by a partitioning are actually of similar probability, the single piece of information needed to differentiate them is utilized most effectively. Sadly, Shannon-Fano does not always yield the best prefix codes.

Because of this, Shannon-Fano is seldom ever employed; Huffman coding is virtually as computationally straightforward and yields prefix codes that consistently attain the shortest predicted code word length. In the IMPLODE compression technique, which is a component of the ZIP file format, Shannon-Fano coding is applied when it is intended to utilize a straightforward algorithm with good performance and little programming needs.

An effective code table is defined by a specification that is used to build a Shannon-Fano tree. The actual method is straightforward:

- Create a list of probabilities or frequency counts that correspond to the supplied list of symbols in order to determine the relative frequency of occurrence for each symbol.

- Arrange the lists of symbols in ascending order of frequency, with the most frequent symbols on the left and the least frequent on the right.

- Split the list in half, keeping the frequency counts for the left half as near as feasible to those for the right.

- The binary digit 0 is allocated to the left portion of the list, while the binary digit 1 is assigned to the right portion. As a result, all of the codes for the symbols in the first portion will begin with 0, and all of the codes for the symbols in the second part will begin with 1.

- Repeat steps 3 and 4 until each symbol has a matching code leaf on the tree by subdividing groups and adding bits to the codes. This should be done for each of the two halves.

Working of Shannon-Fano Algorithm

Listed below are the steps of the algorithm:

- To determine each symbol’s relative frequency of occurrence, create a matching list of probabilities or frequency counts for the provided list of symbols.

- Arrange the lists of symbols in descending order of frequency, starting on the left with the ones that are used the most and moving rightward with the ones that are used the least.

- Split the list into two, with as much similarity as feasible between the frequency counts of the left and right sections.

- The binary digit 0, which is allocated to the left side of the list, and the binary digit 1, which is assigned to the right. For the symbols in the first section, this implies that all of the codes will begin with 0, and for the symbols in the second part, all of the codes will begin with 1.

- Apply steps 3 and 4 to each of the two halves in a circular manner, breaking the groups into smaller subgroups and introducing bits to the codes until each symbol has developed into a matching code leaf on the tree.

- Example:

- ABCDE as a symbol

- Count

A 15

B 7

C 6

D 6

E 5

Sign: ABCDE

- Code

A 00

B 01

C 10

D 110

E 111

Implementation

Implementation of the above approach:

Pseudo-code

1: begin

2: count source units

3: sort source units to non-decreasing order

4: SF-SplitS

5: output(count of symbols, encoded tree, symbols)

6: write the output

7: end

8:

9: procedure SF-Split(S)

10: begin

11: if (|S|>1) then

12: begin

13: divide S by S1 and S2 with about the same count of units

14: add 1 to codes in S1

15: add 0 to codes in S2

16: SF-Split(S1)

17: SF-Split(S2)

18: end

19: end// C++ program for Shannon Fano Algorithm

#include <bits/stdc++.h>

using namespace std;

// declare structure node

struct node {

// for storing symbol

string sym;

// for storing probability or frequency

float pro;

int arr[20];

int top;

} p[20];

typedef struct node;

// function to find shannon code

void shannon(int l, int h, node p[])

{

float pack1 = 0, pack2 = 0, diff1 = 0, diff2 = 0;

int i, d, k, j;

if ((l + 1) == h || l == h || l > h) {

if (l == h || l > h)

return;

p[h].arr[++(p[h].top)] = 0;

p[l].arr[++(p[l].top)] = 1;

return;

}

else {

for (i = l; i <= h - 1; i++)

pack1 = pack1 + p[i].pro;

pack2 = pack2 + p[h].pro;

diff1 = pack1 - pack2;

if (diff1 < 0)

diff1 = diff1 * -1;

j = 2;

while (j != h - l + 1) {

k = h - j;

pack1 = pack2 = 0;

for (i = l; i <= k; i++)

pack1 = pack1 + p[i].pro;

for (i = h; i > k; i--)

pack2 = pack2 + p[i].pro;

diff2 = pack1 - pack2;

if (diff2 < 0)

diff2 = diff2 * -1;

if (diff2 >= diff1)

break;

diff1 = diff2;

j++;

}

k++;

for (i = l; i <= k; i++)

p[i].arr[++(p[i].top)] = 1;

for (i = k + 1; i <= h; i++)

p[i].arr[++(p[i].top)] = 0;

// Invoke shannon function

shannon(l, k, p);

shannon(k + 1, h, p);

}

}

// Function to sort the symbols

// based on their probability or frequency

void sortByProbability(int n, node p[])

{

int i, j;

node temp;

for (j = 1; j <= n - 1; j++) {

for (i = 0; i < n - 1; i++) {

if ((p[i].pro) > (p[i + 1].pro)) {

temp.pro = p[i].pro;

temp.sym = p[i].sym;

p[i].pro = p[i + 1].pro;

p[i].sym = p[i + 1].sym;

p[i + 1].pro = temp.pro;

p[i + 1].sym = temp.sym;

}

}

}

}

// function to display Shannon codes

void display(int n, node p[])

{

int i, j;

cout << "\n\n\n\tSymbol\tProbability\tCode";

for (i = n - 1; i >= 0; i--) {

cout << "\n\t" << p[i].sym << "\t\t" << p[i].pro << "\t";

for (j = 0; j <= p[i].top; j++)

cout << p[i].arr[j];

}

}

// Driver code

int main()

{

int n, i, j;

float total = 0;

string ch;

node temp;

// Input the number of symbols

cout << "Enter number of symbols\t: ";

n = 5;

cout << n << endl;

// Input symbols

for (i = 0; i < n; i++) {

cout << "Enter symbol " << i + 1 << " : ";

ch = (char)(65 + i);

cout << ch << endl;

// Insert the symbol to the node

p[i].sym += ch;

}

// Input probability of symbols

float x[] = { 0.22, 0.28, 0.15, 0.30, 0.05 };

for (i = 0; i < n; i++) {

cout << "\nEnter probability of " << p[i].sym << " : ";

cout << x[i] << endl;

// Insert the value to node

p[i].pro = x[i];

total = total + p[i].pro;

// checking max probability

if (total > 1) {

cout << "Invalid. Enter new values";

total = total - p[i].pro;

i--;

}

}

p[i].pro = 1 - total;

// Sorting the symbols based on

// their probability or frequency

sortByProbability(n, p);

for (i = 0; i < n; i++)

p[i].top = -1;

// Find the Shannon code

shannon(0, n - 1, p);

// Display the codes

display(n, p);

return 0;

}Output:

Enter number of symbols: 5

Enter symbol 1 : A

Enter symbol 2 : B

Enter symbol 3 : C

Enter symbol 4 : D

Enter symbol 5 : E

Enter probability of A : 0.22

Enter probability of B : 0.28

Enter probability of C : 0.15

Enter probability of D : 0.3

Enter probability of E : 0.05

Symbol Probability Code

D 0.3 00

B 0.28 01

A 0.22 10

C 0.15 110

E 0.05 111Python:

# Python3 program for Shannon Fano Algorithm

# declare structure node

class node :

def __init__(self) -> None:

# for storing symbol

self.sym=''

# for storing probability or frequency

self.pro=0.0

self.arr=[0]*20

self.top=0

p=[node() for _ in range(20)]

# function to find Shannon's code

def shannon(l, h, p):

pack1 = 0; pack2 = 0; diff1 = 0; diff2 = 0

if ((l + 1) == h or l == h or l > h) :

if (l == h or l > h):

return

p[h].top+=1

p[h].arr[(p[h].top)] = 0

p[l].top+=1

p[l].arr[(p[l].top)] = 1

return

else :

for i in range(l,h):

pack1 = pack1 + p[i].pro

pack2 = pack2 + p[h].pro

diff1 = pack1 - pack2

if (diff1 < 0):

diff1 = diff1 * -1

j = 2

while (j != h - l + 1) :

k = h - j

pack1 = pack2 = 0

for i in range(l, k+1):

pack1 = pack1 + p[i].pro

for i in range(h,k,-1):

pack2 = pack2 + p[i].pro

diff2 = pack1 - pack2

if (diff2 < 0):

diff2 = diff2 * -1

if (diff2 >= diff1):

break

diff1 = diff2

j+=1

k+=1

for i in range(l,k+1):

p[i].top+=1

p[i].arr[(p[i].top)] = 1

for i in range(k + 1,h+1):

p[i].top+=1

p[i].arr[(p[i].top)] = 0

# Invoke shannon function

shannon(l, k, p)

shannon(k + 1, h, p)

# Function to sort the symbols

# based on their probability or frequency

def sortByProbability(n, p):

temp=node()

for j in range(1,n) :

for i in range(n - 1) :

if ((p[i].pro) > (p[i + 1].pro)) :

temp.pro = p[i].pro

temp.sym = p[i].sym

p[i].pro = p[i + 1].pro

p[i].sym = p[i + 1].sym

p[i + 1].pro = temp.pro

p[i + 1].sym = temp.sym

# function to display shannon codes

def display(n, p):

print("\n\n\n\tSymbol\tProbability\tCode",end='')

for i in range(n - 1,-1,-1):

print("\n\t", p[i].sym, "\t\t", p[i].pro,"\t",end='')

for j in range(p[i].top+1):

print(p[i].arr[j],end='')

# Driver code

if __name__ == '__main__':

total = 0

# Input the number of symbols

print("Enter number of symbols\t: ",end='')

n = 5

print(n)

i=0

# Input symbols

for i in range(n):

print("Enter symbol", i + 1," : ",end="")

ch = chr(65 + i)

print(ch)

# Insert the symbol to the node

p[i].sym += ch

# Input probability of symbols

x = [0.22, 0.28, 0.15, 0.30, 0.05]

for i in range(n):

print("\nEnter probability of", p[i].sym, ": ",end="")

print(x[i])

# Insert the value into the node

p[i].pro = x[i]

total = total + p[i].pro

# checking max probability

if (total > 1) :

print("Invalid. Enter new values")

total = total - p[i].pro

i-=1

i+=1

p[i].pro = 1 - total

# Sorting the symbols based on

# their probability or frequency

sortByProbability(n, p)

for i in range(n):

p[i].top = -1

# Find the shannon code

shannon(0, n - 1, p)

# Display the codes

display(n, p)Output:

Enter the number of symbols: 5

Enter symbol 1 : A

Enter symbol 2 : B

Enter symbol 3 : C

Enter symbol 4 : D

Enter symbol 5 : E

Enter probability of A : 0.22

Enter probability of B : 0.28

Enter probability of C : 0.15

Enter probability of D : 0.3

Enter probability of E : 0.05

Symbol Probability Code

D 0.3 00

B 0.28 01

A 0.22 10

C 0.15 110

E 0.05 111Complexity Analysis

Similar to quick sort, the time complexity runs slowly.

The following describes the recurrence relation:T(n) = T(k) + T(n-k) + Θ(n), where k and n-k are the partition sizes, and k>0.

Worst case: The divisions that are created each time can be extremely imbalanced. For instance, if the probabilities are 0.05, 0.1, 0.2, 0.5, the recurrence relation changes to T(n)=T(n-1)+T(1)+Θ(n), and T(n) ends up being $O(n^2)$.

Best case: The recurrence relation becomes T(n)=2T(n/2)+Θ(n) if the partitions can be partitioned so that the sizes are almost identical, for instance, if the probabilities are 0.2,0.25,0.25,0.3.

T(n) in this example turns out to be Θ(nlogn) (Worst Case)..

Differences between Huffman and Shannon Fano Algorithm

Huffman Coding

ASCII character data is compressed using the Huffman coding compression technique. The Huffman codes in this approach, which do not need to include prefix codes, are created from a collection of probabilities. It was created by David Huffman in 1952 while he was a student at MIT researching information theory. The technique is based on a top-down method in which a binary tree is built from the top down to produce a minimum sequence.

It performs its function by converting the characters in a data file into binary code. The binary codes for the file’s significant characters are the shortest, while those for the characters that appear the least are the longest. It is a widely used technique for lossless text compression that enables the restoration of compressed material in its original format.

Let’s now break down the algorithm using a few steps:

- The symbols or characters are arranged in the first stage in descending order by likelihood.

- The symbol or letter index should be sorted in descending order if the probability is equal.

- Then choose two symbols with the lowest probability, where the top symbol is assigned a bit value of “1” and the lower symbol is assigned a value of “0,” combine them into a new symbol and add the probability.

- Change the symbols as you did in step one.

- The newest symbol is placed beneath the older symbol if the probability is the same.

- The probability total must then equal 1.0 before repeating steps 2 and 3.

- Using binary, describe the codewords for each symbol.

Shannon Fano Coding

The Shannon Fano technique is employed to produce a code that is exclusively decodable and is comparable to Huffman coding. By Claude Shannon and Robert Fano in the year 1949, it was created before the Huffman coding scheme. Additionally, the data is encoded using this method’s usage of probability. The best possible code generation is not, however, guaranteed. It is understood to be a method for creating prefix codes in line with a set of symbols and probabilities.

In addition, these codes are referred to as Shannon codes and have codeword lengths of l(x)=[log 1/P(x)]. As was previously stated, the Kraft inequality requirement is satisfied by the Shannon codeword length, which makes it possible to produce codes that are only decoded by Shannon.

Following are the Instructions for Interpreting the Algorithm:

- The symbols must be sorted in this method by decreasing the probability of occurrence frequency.

- Sort the growing symbol index if the symbol’s frequency is the same.

- The symbols are then divided into two groups with the least amount of variation feasible.

- To assign a symbol to each group 1, repeat step 3.

- Once complete, create the code in accordance with the binary tree.

The following are the differences between the Shannon-Fano algorithm and the Huffman method:

- The outcomes of Huffman encoding are always ideal. Shannon Fano sometimes fails to attain the shortest predicted code word length, unlike Huffman coding.

- While Shannon fano coding makes use of the cumulative distribution function, Huffman coding employs prefix code conditions.

- But the Shannon-Fano method also generates prefix codes.

Conclusion

- The process of encoding data such that it uses less memory while being stored is known as data compression. Two techniques for encoding data are Huffman coding and Shannon Fano Algorithm.

- When text data is compressed using the Shannon-Fano technique, the final file size is less than when ASCII encoding is used. According to the compression ratio between ASCII and the Shannon-Fano algorithm, some data with a large size can be converted into some data with a small size.

- Data compression for ASCII characters is handled via the Huffman coding compression technique. This approach uses Huffman codes, which are created from a collection of probabilities and are not required to have prefix codes. The technique uses a top-down strategy to build a binary tree from the bottom up in order to provide a minimum sequence.

- The Shannon Fano technique is employed to produce a code that is exclusively decodable and is comparable to Huffman coding. Additionally, the data is encoded using this method’s usage of probability. The best possible code generation is not, however, guaranteed. It is understood to be a method for creating prefix codes in line with a set of symbols and probabilities.

- The Huffman coding is more effective and ideal than the Shannon fano coding two encoding techniques.

- When compared to the Huffman method, the ShannonFano algorithm is more successful than the Huffman algorithm when the data contains a long statement and the data text includes more combination characters in the statement or in string/word.